Thoughts on LLM use cases

As we approach the two-year mark of the AI hype initiated by the release of ChatGPT in November 2022 (seems like so long ago…), nearly every person with internet access has an opinion on whether large language models are unoriginal or powerful enough to replace us. Countless studies have enumerated throngs of industries which they proclaim will be upended by the AI revolution - marketing, journalism, wealth management, legal services - but they never seem to point to specific use cases of LLMs on the prompt-able level.

This article is not about my opinion that LLMs are vastly overhyped, although I do believe that they are much less impressive in the context of the past 15 years of rapid progress in artificial intelligence. AI systems were already firmly integrated into our personal lives, from face and eye fingerprinting, to social media and content recommendations, to navigation applications.

This article is about my opinions on how current effective use cases of large language models can be categorized. I believe LLMs, as they stand, are useful for six general areas: search, administration, debugging, interfacing, elementary teaching, and language. It is also important to note the main drawbacks of LLMs that reduce their effectiveness in these and other areas: hallucination, logical reasoning, and personal data requirements.

Note: Below, “LLMs” refers to my general impression of LLMs and their use in applications, whereas “ChatGPT” refers to my personal experience with ChatGPT. This is because I have the most experience with ChatGPT, although I do think the others I’ve used (LLaMa, GPT-J, GPT4All Falcon, Dolly, Perplexity, Claude, Bard, Copilot) have similar use cases and drawbacks.

search

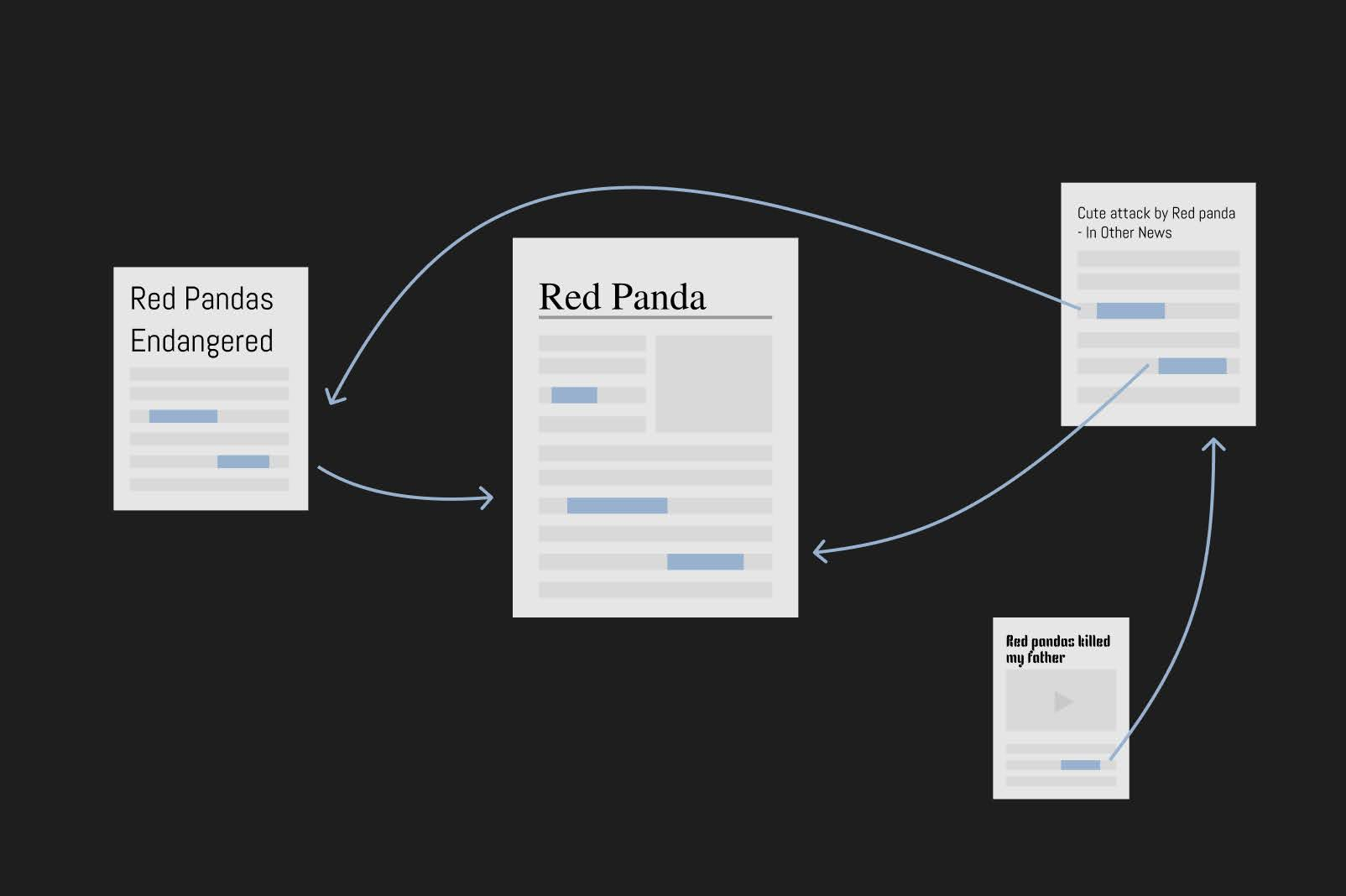

It wasn’t always the case that advanced natural language processing techniques were used in search engines. When you Google a question, most of the resulting links will contain largely text, but Google’s original PageRank algorithm from 1996 for returning relevant results didn’t really understand the content of those results or do complicated comparison to the content of your question - their big technological leap was instead gleaning information mainly from the numbers of links between webpages (i.e., that pages with more links to them are probably more informative).

PageRank promotes webpages with more links to them, indicated here by the size of the page.

Image thanks to George Chemmala

But it’s been over a decade since web search companies started using natural language processing techniques to actually look at the content of the results or the search in some form (e.g. whether you put a “not” in your search). Long before ChatGPT, big search companies were using language models to enhance the quality of search results. LLMs represented a huge step forward in this approach, “understanding language” better than ever before on tens or hundreds of benchmarks. This, I believe, is a legitimately significant use case of LLMs, and probably represents much more relevant search results than before, especially for specific or confusing queries - you can actually make a search with a conversational query. LLM-powered search has also come to research databases (cf. bibliometric analysis connecting different biological fields to identify potential therapies), product recommendations, job boards, and through images (on the web or in your camera roll).

Caught up in the AI hype, one begins to wonder whether “search” as a functionality is the wrong question, and whether all the capability Google has to understand questions and answers could just enable LLMs to directly produce text responses to your search queries, as if asking an expert. Already, many use ChatGPT as a search engine over Google, and at Google IO this year, the company demonstrated the search page as an AI-generated overview answering your question, with a separate “Web” tab, just like “News” and “Images”, showing you the “plain Google” page of links relevant to your question. However, this ignores the fact that most people use Google search to actually search for specific pages, files, images on the web, not just abstract text.

Moreover, LLMs still struggle (and, by their construction, will probably continue to struggle) with distinguishing fact from fiction, a phenomenon called hallucination. You can ask Gemini to fact-check itself, which helps; you can ask ChatGPT to think carefully and explain its reasoning and judge whether what it said is true, which helps, but ultimately they don’t have a sense of true or false. Maybe this is an unfair criticism because we live in a complex world where statements are often not completely true or false, but current LLMs are in practice actually hindered by this property.

People make this out to be a huge deal for disinformation, especially with regard to elections, but in my opinion AI safety experts at Anthropic and such are doing a good job of preventing that, and I’m also a worried that part of the effect is that governments will interfere more with the free spread of information on the Internet. Big AI companies are also doing a pretty good job of preventing exploits along the lines of “how do I build a bomb”, “what’s x’s home address”, and plenty of other dangers. I think hallucination most hinders LLMs’ ability to handle complex technical questions - in my experience, ask it to prove a mathematical theorem and unless the proof is very well-known, it will probably come up with something that sounds somewhat plausible but is not a complete or entirely correct argument.

administration

One of my biggest personal use cases for ChatGPT hinges on its ability to write “reasonable”-sounding text, even if it is somewhat bland and generic. Generally, I am referring to text that’s one part content to three parts filler as ‘administration’ because I think this use case includes not just job applications but also a lot of template-oriented bureaucracy - understanding and writing, for example, legal forms and contracts, tax documentation, and other reports. I think for the time being, this use case presents the greatest commercial opportunity, before AI systems become a lot better at interfacing with logical algorithms or handling a lot of sensitive user-specific context in a secure way, and indeed quite a few startups are already making inroads into these domains.

I’ve found this personally useful for correcting professional emails and editing cover letters, with prompts like “make this sound less awkward” or “for a student with x and y experiences, answer the following question in 1-2 paragraphs” to get me started. I tend to find starting the most difficult part of applications and projects, and ChatGPT has actually greatly helped me on this psychological level.

debugging

As LLMs are pretty much trained on the whole Internet, they know the solutions to most of the tech problems, coding issues, and little annoying bugs that have ever been encountered, and are extremely helpful for suggesting solutions to these issues. This also includes little annoying bugs in life, like fixing a sink or caring for a particular plant. In my experience, this doesn’t, however, extend to much creativity in coming up with technical innovations or working out solutions to logical, multi-step technical challenges where every piece is accurate, or even when debugging issues with new technologies that don’t have decently sized existing online support documentation. Without complex prompting strategies, LLMs don’t inherently perform formal logical reasoning, and they generally struggle with tasks like math that require multi-step reasoning. This use case is pretty limited in the actual scope of the technology, but is improving in quality as LLMs become trained on larger codebases designed for these tasks.

interfacing

Although LLMs are by default limited to their training data, both in grammar and in breadth of content, they can be finetuned to be able to interface with existing knowledge bases or classical AI systems to great effect. For example, the Wolfram plugin for ChatGPT allows ChatGPT to retrieve the results of executing code in the Wolfram language, which is very good at factual knowledge, symbolic math, and visualization - greatly complementing ChatGPT’s weaknesses. Similarly, LLMs can interface with other systems to syntax-check generated code or execute instructions in task languages to control smart home appliances or smart car navigation. A large component of this potential capability lies in retrieving information from proprietary knowledge bases like customer service manuals or guided tour information to provide better user experiences in either of those situations.

Using this capability is where the potential power of the long-desired “AI personal assistant” becomes apparent. LLM assistants, besides their obvious uses of translating languages and answering questions, could interface with applications to schedule events in your calendar, send emails in your writing style, or play songs from your Spotify about whatever thing you’re pointing your phone camera at. This is the idea behind new products like the Rabbit R1 and the Humane AI Pin, and in the AI world, these small LLMs that are trained to interface with one application, language, or topic are called “agents”.

Current versions of this idea are working through the technical challenges that come with interfacing with so many different programs, but the larger ethical issue here is that for an AI assistant to be truly helpful, it has to know everything about you. Think of a human personal secretary - they should know your schedule and preferences in order to make informed decisions in your stead, and the same personal data requirements would apply to an algorithm. The trick is that that algorithm is owned by a company, which will then want to use your data to improve the algorithm or to recommend actions and products for you to spend money on. And either way, the storage of that data is a massive security concern. These are the same problems with existing super-targeted recommendation algorithms, amplified by the scale of the data that’s necessary for the “AI personal assistant” idea to work.

elementary teaching

LLMs may not be great at answering technical questions that require logical reasoning, but if you have questions like “how does x thing work again”, they can be very good. And for most teaching through at least a middle school level, ChatGPT does well - in fact, OpenAI is working on a partnership with Khan Academy and some school districts on the Khanmigo teaching assistant, which can create assignments for students and offer feedback (in a judgment-free manner). That being said, we are a long way away from the dream of individualized tutors that know how much you know and lead you along the right intuitions and correct your mistakes. In addition, LLMs can’t handle neurodivergent people, social learning, or controversial or difficult topics in any meaningful way yet, so they will not be replacing human teachers any time soon.

language

Finally, LLMs are language models, so an LLM can be a dictionary and thesaurus and Grammarly and Duolingo all in one. I find this especially good for questions like “what’s that word that means x” and “what’s the name of this plant” and “how do you get the point across of x in y language”. You can very much use them to converse and learn languages, since the biggest LLMs tend to be multilingual. Understanding and communicating about tricky language topics is the original use case for language models, and represents many of the standards they were developed against (e.g., the Winograd Schema Challenge), so modern LLMs pretty much crush any language-related challenge you throw at them. Multimodal and timing-related challenges like having an LLM be part of a conversation are still awkward, but I’m confident that within a couple years we’ll have them listening to multiple people at once, butting in when appropriate, and understanding and producing inflection and fragments fluently.

I will be curious to see the direction of LLMs in the next two years. In the future:

- Maybe models will just continue to grow larger and more data-hungry and that will solve the issues with hallucination and logical reasoning.

- Maybe dedicated AI hardware will proliferate and enable plenty of small, specialized LLMs for many different use cases that can all be run locally and therefore don’t take personal data to continue training on.

- Maybe these use cases will develop, and the unsuccessful ChatGPT wrapper-businesses will be weeded out once AI funding falls a little, and models’ capabilities will not really change until the next deep technical development, like new model architectures.

Maybe some combination of the above. For now, though, large language models remain saddled with their share of problems and limitations.